Cold Start

Analyzing cold start on serverless functions

1. Introduction

Serverless computing has emerged as a revolutionary paradigm in cloud computing, offering developers the ability to build and run applications without managing the underlying infrastructure. However, this model introduces unique challenges, particularly in terms of performance variability due to the “cold start” phenomenon.

This study aims to provide a comprehensive analysis of cold start times across major cloud platforms, investigating the factors that influence these delays and offering insights to optimize serverless function performance.

1.1 Cold Start in Serverless Computing

A “cold start” occurs when a serverless function is executed in a newly created container, as opposed to reusing an existing warm container. This process introduces latency that can significantly impact application performance, especially for latency-sensitive applications.

The typical steps involved in a cold start are:

- Server allocation

- Container setup with specified runtime

- Loading of required dependencies and packages

- Loading of function code into memory

- Execution of function handler code

In contrast, a warm start only involves the last step, as it reuses an existing container.

1.2 Significance of the Study

Understanding and mitigating cold start issues is crucial for:

- Optimizing application performance

- Improving user experience

- Managing costs effectively in serverless environments

- Designing efficient serverless architectures

2. Background

2.1 Serverless Computing Landscape

Serverless computing, also known as Function-as-a-Service (FaaS), has gained significant traction since its introduction. Major cloud providers offering serverless platforms include:

- Amazon Web Services (AWS) with Lambda

- Google Cloud Platform (GCP) with Cloud Functions

- Microsoft Azure with Azure Functions

2.2 Previous Research

Several studies have explored cold start times in serverless environments:

- Manner et al. (2018) investigated factors influencing cold starts in FaaS platforms.

- Jackson and Clynch (2018) examined the impact of language runtime on serverless function performance and cost.

- Cordingly et al. (2020) analyzed the implications of programming language selection for serverless data processing pipelines.

Our study builds upon this foundation, providing a more recent and comprehensive analysis across multiple platforms.

3. Methodology

3.1 Platforms and Tools

- Cloud Platforms: AWS Lambda, GCP Cloud Functions, Microsoft Azure Functions

- Development and Deployment: Serverless Framework

- Monitoring and Logging: AWS CloudWatch, GCP Cloud Monitoring, Azure Monitor

3.2 Experimental Setup

3.2.1 Function Implementation

- Implemented a simple HTTP-triggered function that returns a “Hello, World!” message

- Developed versions in Python, Node.js, Go, and Java

- Created variations with different memory allocations and dependency loads

3.2.2 Deployment Configurations

- Deployed functions with and without VPC integration (where applicable)

- Utilized different memory allocations: 128MB, 256MB, 512MB, 1024MB, 2048MB

- Created functions with varying levels of dependencies:

- No dependencies

- Light dependencies (e.g., simple utility libraries)

- Heavy dependencies (e.g., data processing libraries)

3.3 Data Collection Process

AWS Lambda:

- Deployed functions using Serverless Framework

- Developed a Node.js script using AWS SDK to invoke functions

- Forced cold starts by re-deploying functions before each invocation

- Collected cold start times from AWS CloudWatch log insights

- Created approximately 40 different Lambda configurations

- Each configuration invoked approximately 20 times

GCP Cloud Functions:

- Deployed functions manually through GCP Console and gcloud CLI

- Triggered cold starts through new deployments

- Observed 3-4 instances of deployment for each configuration

- Collected execution times from GCP Cloud Monitoring

Azure Functions:

- Deployed functions using Azure CLI and Azure Portal

- Implemented functions for Python and Node.js

- Collected cold start times using Azure Application Insights

3.4 Variables Analyzed

- Programming Languages: Python, Node.js, Go, Java

- Memory Allocations: 128MB, 256MB, 512MB, 1024MB, 2048MB

- Dependencies: No dependencies, light dependencies, heavy dependencies

- Network Configuration: With and without VPC (for AWS Lambda)

- Region: Deployed in multiple regions to account for geographical variations

3.5 Data Analysis

- Utilized Python with pandas and matplotlib for data processing and visualization

- Performed statistical analysis to determine significance of various factors

- Conducted comparative analysis across platforms and configurations

4. Results and Analysis

4.1 AWS Lambda

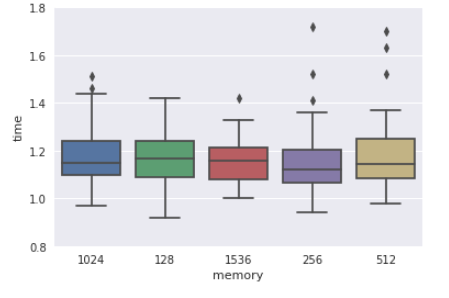

4.1.1 Impact of Memory Size

Observations:

- For functions without dependencies, cold start time showed little dependence on memory size.

- Functions with dependencies exhibited improved cold start times with increased memory allocation.

- The improvement was most significant when moving from 128MB to 512MB, with diminishing returns beyond 1024MB.

4.1.2 Programming Language Comparison

Observations:

- Python and Node.js demonstrated the fastest cold start times.

- Java showed the slowest cold start times, particularly for smaller memory allocations.

- Go performed well, especially for compute-intensive tasks.

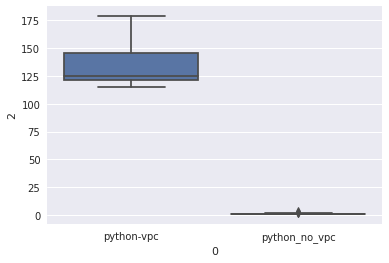

4.1.3 Impact of VPC Integration

Observations:

- VPC integration significantly increased cold start times across all configurations.

- The impact was more pronounced for functions with smaller memory allocations.

4.1.4 Dependency Analysis

Observations:

- Light dependencies had minimal impact on cold start times.

- Heavy dependencies significantly increased cold start times, especially for smaller memory allocations.

- Using AWS Lambda Layers for dependency management showed improved performance compared to including dependencies in the deployment package.

4.2 Google Cloud Functions

4.2.1 Language Comparison

Observations:

- Go functions demonstrated the lowest cold start times.

- Python functions were relatively slower compared to other languages.

- Node.js performed well, especially for functions with dependencies.

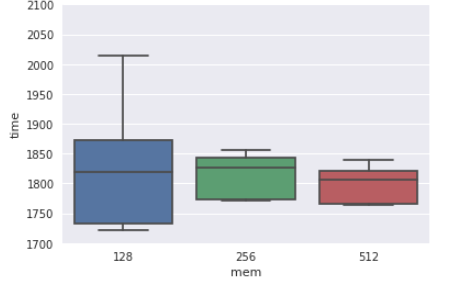

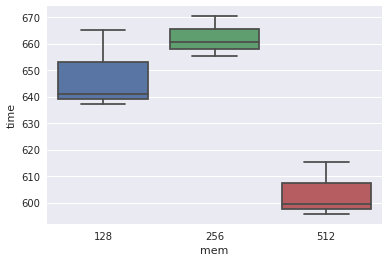

4.2.2 Memory Allocation Impact

Observations:

- Increasing memory allocation generally improved cold start times.

- The impact was more significant for functions with dependencies.

4.3 Microsoft Azure Functions

4.3.1 Language Comparison

Observations:

- Python functions demonstrated faster cold start times compared to Node.js.

- The difference was more pronounced for functions with dependencies.

4.3.2 Consumption vs. Premium Plan

Observations:

- Premium plan showed significantly reduced cold start times.

- The impact was more noticeable for functions with dependencies and larger memory allocations.

5. Discussion

5.1 Cross-Platform Comparison

Our study reveals significant variations in cold start performance across AWS Lambda, GCP Cloud Functions, and Azure Functions:

- AWS Lambda showed the most consistent performance across different configurations, with Python and Node.js being the top performers.

- GCP Cloud Functions demonstrated excellent performance with Go, making it a strong choice for compute-intensive tasks.

- Azure Functions exhibited competitive performance, particularly with Python, and showed significant improvements with the Premium plan.

5.2 Factors Influencing Cold Start Times

-

Programming Language: Interpreted languages like Python and Node.js generally showed faster cold start times compared to compiled languages like Java.

-

Memory Allocation: Increased memory allocation improved cold start times, particularly for functions with dependencies. However, there were diminishing returns beyond certain thresholds.

-

Dependencies: Heavy dependencies significantly increased cold start times across all platforms. Utilizing platform-specific optimizations (e.g., AWS Lambda Layers) proved beneficial.

-

Network Configuration: VPC integration in AWS Lambda introduced substantial delays, highlighting the need for careful consideration when implementing network isolation.

-

Execution Environment: Premium/dedicated execution environments (e.g., Azure Premium plan) showed marked improvements in cold start times.

5.3 Platform-Specific Insights

- AWS Lambda: Offers the most flexible configuration options, allowing fine-tuned optimization. The impact of VPC integration is a crucial consideration.

- GCP Cloud Functions: Excels with Go functions, making it an attractive option for performance-critical applications.

- Azure Functions: The Premium plan provides a compelling option for minimizing cold starts, especially for production workloads.

6. Conclusions and Recommendations

Based on our comprehensive analysis, we offer the following conclusions and recommendations:

6.1 General Observations

- Significant improvements in serverless function performance have been observed compared to previous benchmarks.

- Programming language performance varied across platforms:

- AWS and Azure: Python performed best

- GCP: Go performed best

- Adding dependencies via optimized methods (e.g., AWS Lambda Layers) improved performance.

- Network isolation features like VPC integration can significantly impact cold start times.

6.2 Recommendations for Reducing Cold Start Times

- Choose the appropriate programming language based on the specific platform and use case.

- Optimize memory allocation, particularly for functions with dependencies.

- Minimize dependencies and utilize platform-specific optimization techniques.

- Implement warm-up strategies to keep frequently used functions active.

- Consider premium/dedicated execution environments for production workloads.

- Carefully evaluate the need for VPC integration, considering its performance impact.

6.3 Best Practices for Serverless Function Design

- Design functions with a single, well-defined purpose to minimize complexity and dependencies.

- Implement effective error handling and retry mechanisms to mitigate the impact of cold starts.

- Utilize asynchronous processing where possible to reduce the impact of cold starts on user experience.

- Regularly monitor and analyze function performance to identify optimization opportunities.

7. Future Work

While this study provides a comprehensive analysis of cold start times in serverless functions, several areas warrant further investigation:

-

Long-term Performance Analysis: Conduct longitudinal studies to understand how cold start times evolve over extended periods and across platform updates.

-

Workload-Specific Optimization: Investigate cold start optimization techniques for specific workload types (e.g., data processing, API serving, batch jobs).

-

Emerging Serverless Platforms: Extend the analysis to include newer serverless platforms and edge computing environments.

-

Cost-Performance Trade-offs: Develop models to optimize the balance between performance improvements and associated costs in serverless architectures.

-

Advanced Warm-up Strategies: Explore and evaluate sophisticated warm-up techniques to minimize cold starts in production environments.

8. References

-

Manner, Johannes, et al. “Cold start influencing factors in function as a service.” 2018 IEEE/ACM International Conference on Utility and Cloud Computing Companion (UCC Companion). IEEE, 2018.

-

Jackson, David, and Gary Clynch. “An investigation of the impact of language runtime on the performance and cost of serverless functions.” 2018 IEEE/ACM International Conference on Utility and Cloud Computing Companion (UCC Companion). IEEE, 2018.

-

Cordingly, Robert, et al. “Implications of Programming Language Selection for Serverless Data Processing Pipelines.” 2020 IEEE Intl Conf on Dependable, Autonomic and Secure Computing, Intl Conf on Pervasive Intelligence and Computing, Intl Conf on Cloud and Big Data Computing, Intl Conf on Cyber Science and Technology Congress (DASC/PiCom/CBDCom/CyberSciTech). IEEE, 2020.

-

AWS. “AWS Lambda Developer Guide.” Amazon Web Services, Inc., 2021, docs.aws.amazon.com/lambda/latest/dg/welcome.html.

-

Google Cloud. “Cloud Functions Documentation.” Google Cloud, 2021, cloud.google.com/functions/docs.

-

Microsoft Azure. “Azure Functions documentation.” Microsoft Docs, 2021, docs.microsoft.com/en-us/azure/azure-functions/.

-

Baldini, Ioana, et al. “Serverless computing: Current trends and open problems.” Research Advances in Cloud Computing. Springer, Singapore, 2017. 1-20.